Artificial Intelligence (AI) is already present in many systems today, from voice assistants to intelligent algorithms that evaluate our online shopping or social media behavior. In the future, we will encounter AI systems much more frequently, especially in critical application areas such as autonomous driving, production automation/Industrie 4.0, or medical technology. In these areas, Dependable AI – that is, controlling the risk of unacceptable failures and functional insufficiencies of AI systems – plays an important role. Fraunhofer IESE advises and supports companies in engineering dependable AI systems and accompanies them throughout the entire lifecycle: from AI strategy via AI development to AI assurance, including AI validation, AI auditing, and compliance with legal and normative requirements.

Dependable AI – holistic engineering of dependable AI systems

From AI strategy via AI development to the assurance of AI including AI validation and AI auditing

Do you want to use Artificial Intelligence for your systems? We provide answers to your most important questions:

- Dependable AI and engineering of dependable AI systems: What does this mean?

- What opportunities does the use of Dependable AI present for businesses?

- Which challenges have to be mastered in the construction, verification/validation, and operation of Dependable AI systems?

- How to develop Dependable AI systems?

- How can Fraunhofer IESE support my company in Dependable AI in concrete terms?

- Why should my company collaborate with Fraunhofer IESE on Dependable AI?

- What references does Fraunhofer IESE have regarding Dependable AI?

Dependable AI and engineering of dependable AI systems: What does this mean?

Definition

Dependability of a system describes its ability to avoid unacceptable failures in the provision of a service or functionality (Jean-Claude Laprie). Dependability includes, for example, the availability and reliability of a service, but also, in particular, functional safety, which aims to avoid catastrophic consequences for users and the environment (i.e., serious or even fatal accidents resulting from failures). Integrity, security, and maintainability are also quality properties commonly discussed in the context of dependability.

Accordingly, the engineering of dependable AI systems applies systems and software engineering principles to systematically ensure dependability during the construction, verification/validation, and operation of an AI system, and in particular to take legal and normative requirements into account right from the start.

To this end, it is important to understand that AI systems are developed in a fundamentally different way than traditional software-based systems. Often, the requirements on an AI system cannot be described completely, and the system must function dependably in an almost infinite application space. This is where established methods and techniques of classical systems and software engineering reach their limits and new, innovative approaches are required. In an AI component, the functionality is not programmed in the traditional way but is created by applying algorithms to data. The result is a model that is merely executed by the software at runtime. Machine Learning (ML) and, more specifically, Deep Learning (DL) are examples of this approach. Due to its structure and inherent complexity, the resulting model (e.g., an Artificial Neural Network) is generally not comprehensible to humans, so the decisions made by an AI system are often not understandable either.

Examples

Typical application areas are systems where the dependability of the AI system – i.e., managing the risk of failures or functional insufficiencies – is a concern.

Smart Mobility

Highly automated and autonomous vehicles will fundamentally change mobility and logistics (transport of goods) and give rise to new digital services. However, the risks posed by such a vehicle must be acceptable for its users and for people interacting with it (such as passers-by). What exactly constitutes an acceptable risk is still being debated, including by ethics committees. Two basic elements are already widely accepted: a positive risk balance and the ALARP principle (As Low As Reasonably Practicable). A positive risk balance requires that the risk to life and limb be lower than if a human were operating the system manually. Under ALARP, it must be demonstrated that everything that can reasonably be done to minimize risks, depending on feasibility and effort, is actually being done.
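To illustrate why demonstrating a positive risk balance is statistically demanding, the following minimal Python sketch compares a conservative upper bound on an automated system's fatal-accident rate with a human-driver baseline. It assumes that fatal accidents follow a Poisson process and that none were observed during testing. The function names and the baseline of 5e-9 fatalities per km are hypothetical placeholders, not figures from this page or an accepted assessment method. ALARP cannot be reduced to such a threshold check and is not modeled here.

```python
import math


def upper_rate_bound_zero_events(exposure_km: float, confidence: float = 0.95) -> float:
    """One-sided upper confidence bound on a Poisson event rate (events per km)
    when zero events were observed over `exposure_km` kilometers of testing."""
    # P(no event | rate) = exp(-rate * exposure) must stay >= 1 - confidence,
    # which gives rate <= -ln(1 - confidence) / exposure ("rule of three" for 95 %).
    return -math.log(1.0 - confidence) / exposure_km


def has_positive_risk_balance(system_rate_bound: float, human_baseline_rate: float) -> bool:
    """Positive risk balance in its simplest form: the conservatively bounded system risk
    must be lower than the risk of manual operation."""
    return system_rate_bound < human_baseline_rate


HUMAN_BASELINE = 5e-9  # hypothetical: fatal accidents per km driven by humans

for km in (1e8, 1e9):
    bound = upper_rate_bound_zero_events(km)
    print(f"{km:.0e} km without fatality: bound {bound:.2e}/km, "
          f"positive risk balance: {has_positive_risk_balance(bound, HUMAN_BASELINE)}")
```

With these assumed numbers, one billion test kilometers without a fatality would support the claim at 95% confidence, whereas 100 million kilometers would not; this is one reason why testing alone rarely suffices and systematic engineering arguments are needed.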

Industrie 4.0

AI is one of the pillars of the fourth industrial revolution, enabling smart factories that can create individualized products in a highly flexible manner. In addition to scenarios such as Production-as-a-Service or Predictive Maintenance, new smart machines are also being used. Driverless transport systems plan their routes independently and adapt them to demand. Cobots support factory workers and can be trained efficiently for a wide variety of tasks. Today, mechanisms such as light barriers and safety cages are used that shut the system down completely as soon as a human enters the restricted zone. However, this does not allow the desired level of close interaction with humans. In the future, smarter protective functions will be needed so that humans who use and interact with such systems are not exposed to any increased risk.

Digital Health

Physicians are already supported by AI systems in the prevention, diagnosis, and therapy of diseases. Here, AI is often responsible for advanced image and data analysis (e.g., interpreting a CT image), enabling analyses whose quality and quantity cannot be achieved by conventional means. The first robotic systems are already being used in surgery, although they are still controlled by humans. However, the more medical professionals rely on such systems and the higher the degree of autonomy of AI systems becomes in the future, the more important it will be to control the risk of failures and functional insufficiencies of the AI.

Which role does trustworthiness play in Dependable AI?

Trustworthiness is a prerequisite for people and societies to develop, deploy, and use AI systems (European Commission’s Ethics Guidelines for Trustworthy AI). Similar to aviation or nuclear energy, it is not only the components of the AI system that need to be trustworthy, but the socio-technical system in its overall context. A holistic and systematic approach is required to ensure trustworthiness. According to the guidelines of the European Commission, trustworthy AI consists of three aspects that should be fulfilled throughout the entire lifecycle:

- Compliance with all applicable laws and regulations

- Adherence to ethical principles and values

- Robustness both from a technical and social perspective

In this framework, the dependability of an AI system primarily concerns its technical robustness, achieved in compliance with applicable laws and standards.

What opportunities does the use of Dependable AI present for businesses?

The application fields of AI are diverse: AI plays a crucial role in the future scenarios of almost all industries, whether Smart Mobility, Smart Production/Industrie 4.0, Digital Health, Smart Farming, Smart City, or Smart Region. Most of these scenarios rely on data-driven intelligent systems that are too complex to be constructed by classical programming. Methods such as Machine Learning tackle such highly complex problems by training models on large amounts of data.

AI approaches can be used to either optimize existing processes (such as more efficient maintenance of machines or sorting of workpieces) or to create new user-oriented products and services (such as smart mobility services based on autonomous vehicles).

The potential of AI for the economy has been examined in a wide variety of studies. These studies predict strong growth for the next few years, both for Germany and worldwide. Much of the growth is expected from completely new and more user-oriented products. The potential is huge, but so is the competition in the global marketplace. Countries like the U.S. and China are investing on a large scale, and their structures and mentality make them a favorable environment for start-ups and for the rapid establishment of innovative products and services. To stand up to this competition, Europe and Germany must build on their own strengths: engineered quality and dependability!

For many of the future scenarios mentioned above, Dependable AI is crucial for success. This applies, for example, to everything related to highly automated or autonomous systems and cognitive systems in safety-critical application areas. Without dependability, there is no trust and trustworthiness; without trust and trustworthiness, there is no acceptance and no success. After all, hardly anyone would use autonomous vehicles or work with adaptive robots in a working environment if these were not fundamentally safe in terms of how they function in their intended environment (such as on the road or in a factory).

How to develop Dependable AI systems?

The safety assurance of a dependable AI system relies on various procedures during construction, verification/validation (V&V), and operation, depending on the criticality of the functionality.

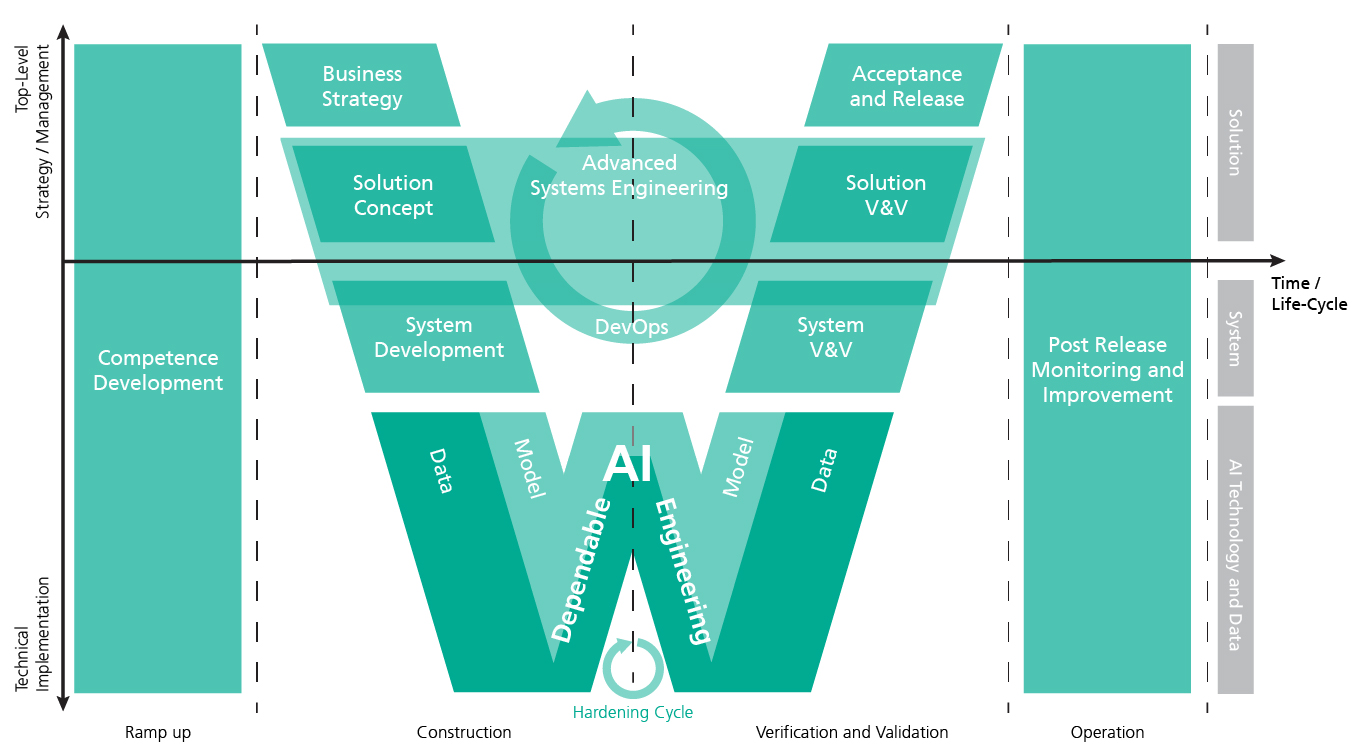

Figure 1 shows Fraunhofer IESE’s view of the fundamental process areas in a W-model. It distinguishes different activities in the lifecycle of an AI system (x-axis) and the different stakeholders involved in the various phases, from the management level to the operative/technical level. The presentation of the activities and levels is based on VDE-AR-E 2842-61, which defines a reference model for trustworthy AI (developed by DKE, the organization responsible for the development of standards in VDE and DIN). The process follows a holistic systems engineering approach. Depending on the application area, individual system components are often delivered by suppliers as black boxes and must be integrated into the overall system.

Competence Development

The development process is typically preceded by a ramp-up phase in which the competencies required in the company are identified and developed. For the management level, it is important in this context to understand both the concrete possibilities offered by AI systems for their own business and the stumbling blocks encountered in implementation. For the operative/technical level, on the other hand, knowledge about concrete techniques/methods/tools for the implementation plays an important role.

Construction and V&V

The overall process starts with the development of the business strategy and the elaboration of the concrete potentials/added values expected from an AI system. From this, the technical requirements on the system level and a concrete solution concept are derived. Depending on the criticality of the system, further analyses are carried out on the system level (e.g., regarding functional safety) in order to take regulatory and legal requirements into account during development. Following conventional systems engineering approaches (such as ISO/IEC 15288 or ISO/IEC 12207), the system is decomposed into subsystems, which can consist of software and hardware. The software subsystems, in turn, consist of classical software components and of AI-based components, which may implement either a nominal function or a safety function of the system.

Today, software components are typically developed in an agile process (such as Scrum) that runs in parallel to hardware development. AI components are usually also developed in a highly iterative process, but one that is oriented more toward common data science methods (such as CRISP-DM). This means that the model is improved incrementally and iteratively until it meets the specified quality criteria; in this context, we speak of the evaluation or hardening of the AI model. The individual components are then integrated into the overall system and verified or validated as a whole before the system can be released after satisfying defined acceptance and release criteria (e.g., according to required testing and auditing processes). In practice, this overall process for construction and verification/validation is iterated several times, depending on the size and complexity of the system and the development project.
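The iterative hardening described above amounts to a loop with an explicit exit condition: improve the model, evaluate it, and stop once all specified quality criteria hold or the budget is used up. The minimal Python sketch below assumes hypothetical `improve` and `evaluate` callables (e.g., retraining with additional data and computing metrics on a validation set) and quality criteria expressed as simple thresholds; it is not tied to CRISP-DM or to any specific toolchain.

```python
from typing import Callable, Dict, List, Tuple, TypeVar

M = TypeVar("M")  # any model representation

# Hypothetical quality criteria: metric name -> (comparison operator, threshold)
Criteria = Dict[str, Tuple[str, float]]


def unmet_criteria(metrics: Dict[str, float], criteria: Criteria) -> List[str]:
    """Return the names of all quality criteria that the given metrics violate."""
    failed = []
    for name, (op, threshold) in criteria.items():
        if op not in (">=", "<="):
            raise ValueError(f"unsupported operator {op!r} for {name}")
        value = metrics.get(name)
        if value is None:
            failed.append(name)  # a metric that was not measured counts as unmet
        elif (op == ">=" and value < threshold) or (op == "<=" and value > threshold):
            failed.append(name)
    return failed


def harden(model: M,
           improve: Callable[[M], M],
           evaluate: Callable[[M], Dict[str, float]],
           criteria: Criteria,
           max_iterations: int = 10) -> Tuple[M, int, List[str]]:
    """Improve the model until all criteria hold or the iteration budget is used up.

    Returns the final model, the number of improvement iterations, and the still-unmet criteria.
    """
    failed = unmet_criteria(evaluate(model), criteria)
    iteration = 0
    while failed and iteration < max_iterations:
        model = improve(model)
        iteration += 1
        failed = unmet_criteria(evaluate(model), criteria)
    return model, iteration, failed
```

Returning the unmet criteria, rather than just a boolean, keeps the result usable as input for the subsequent release decision: a model that exhausts its budget is not silently accepted.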

Operation

The lifecycle of an AI system does not end with its deployment. In the operation phase, the aim is to monitor the performance of the system and to learn from its real-world behavior how to improve the AI system and/or the AI model. AI systems that continue to learn at runtime, i.e., that change their behavior on the basis of experience gained, additionally require dynamic risk management procedures.

How can Fraunhofer IESE support my company in Dependable AI in concrete terms?

Fraunhofer IESE advises and supports companies holistically in the engineering of dependable AI systems and accompanies them throughout the entire lifecycle: from AI strategy via AI development to AI assurance, including AI validation, AI auditing, and the fulfillment of legal and normative requirements. Our approach is multidisciplinary: we have competencies in software and systems engineering as well as in data science and innovation strategy.

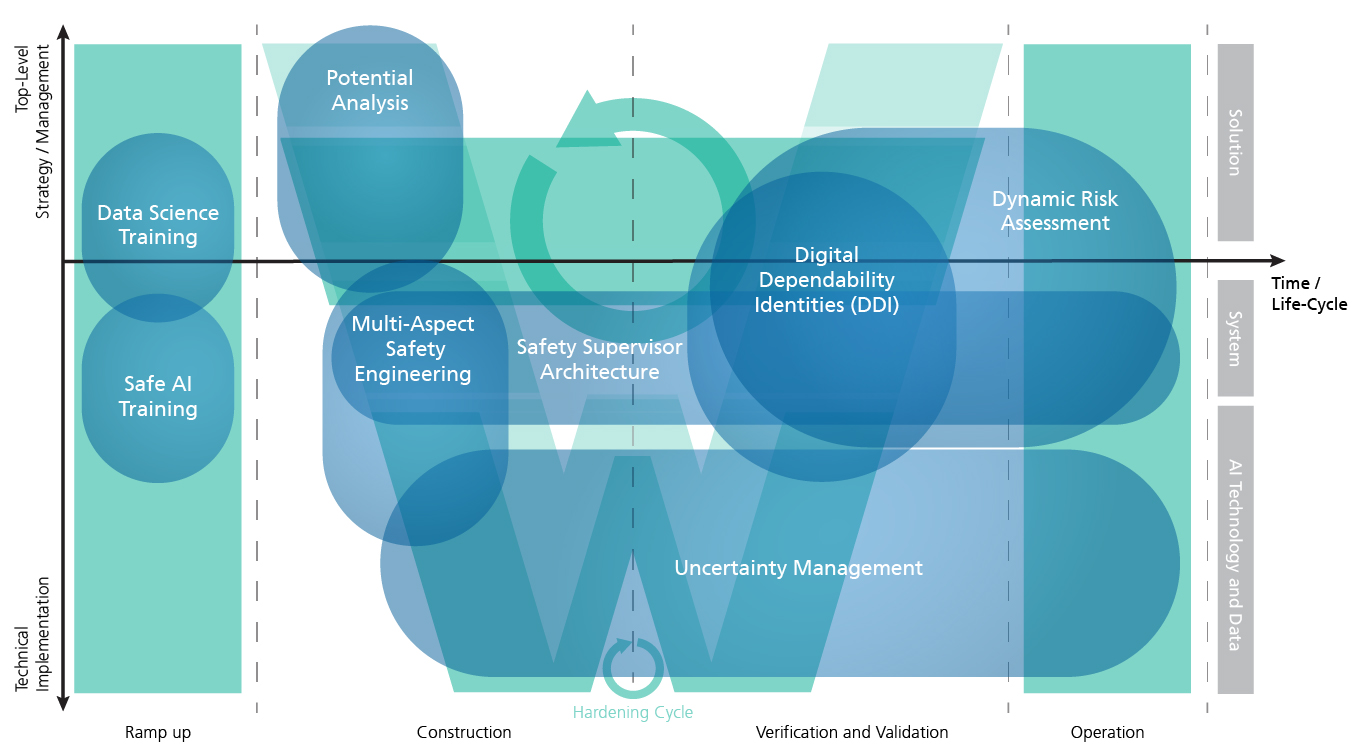

In our research in the context of Dependable AI, we have developed concrete solution components for key challenges, which we are transferring into practice. Figure 2 shows a classification of solution components in the depicted process areas of the W-model.

AI Potential Analysis

The added value of AI for a company is worked out in potential analyses. Using established templates (such as the Value Proposition Canvas or the Business Model Canvas, BMC), the impact of AI on the business model is elaborated. We compile relevant norms, standards, and quality guidelines for the application context and show which AI systems are already possible today and which requirements will have to be met in the future, depending on criticality. During this process, we also identify the gap between the company’s current setup and its future direction and develop a strategy. Using prototypes, promising application scenarios are evaluated in terms of profitability, demand, and technical feasibility (e.g., with regard to the availability of data and the quality of the predictions).

Multi-Aspect Safety Engineering

Especially for the development of highly automated and autonomous systems, not only functional safety (ISO 26262) plays an important role, but also the safety of the intended functionality (SOTIF, ISO/PAS 21448) and the systematic development of a safe nominal behavior. In a model-based approach, we use state-of-the-art safety engineering methods and techniques to model the system holistically, analyze it, and manage the identified risks. Assurance or safety cases are used to argue that the system is dependable: within the assurance or safety case, evidence is used to show that the system behaves in such a way that the risk acceptance threshold is not exceeded.
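The argument structure of an assurance case can be pictured as a tree of claims that are broken down into subclaims and ultimately backed by evidence, loosely in the spirit of graphical notations such as GSN (Goal Structuring Notation). The following minimal Python sketch, with hypothetical class and function names, only checks the structural completeness of such a tree; whether the evidence is actually sufficient remains an expert judgment that code cannot replace.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Evidence:
    description: str
    accepted: bool  # e.g., the analysis or test report has been reviewed and accepted


@dataclass
class Claim:
    statement: str
    subclaims: List["Claim"] = field(default_factory=list)
    evidence: List[Evidence] = field(default_factory=list)


def gaps(claim: Claim) -> List[str]:
    """Return every claim in the tree that is undeveloped or rests on unaccepted evidence."""
    found = []
    undeveloped = not claim.subclaims and not claim.evidence
    if undeveloped or not all(e.accepted for e in claim.evidence):
        found.append(claim.statement)
    for sub in claim.subclaims:
        found.extend(gaps(sub))
    return found


def is_supported(claim: Claim) -> bool:
    """A claim counts as supported only if its whole argument tree has no gaps."""
    return not gaps(claim)
```

For example, a top-level claim such as "Residual risk does not exceed the risk acceptance threshold" would remain unsupported as long as one of its subclaims, say on perception error rates, still rests on a test report that has not been accepted.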

Safety Supervisor Architecture

Another central element for the dependability of a system is its architecture. Some architectures, for example, place a kind of safety container around the AI. These Safety Supervisor or Simplex architectures override the AI’s decision if it would lead to dangerous behavior of the AI system. Such approaches are interesting for Machine Learning components in general and particularly important for AI systems that continue to learn over their lifetime and can therefore adapt their behavior. Since it may be impossible to know at design time which data the AI will use as a basis for further learning, appropriate guardrails for its behavior must be defined and incorporated into the system.
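The following minimal Python sketch illustrates the Simplex idea for a hypothetical longitudinal vehicle controller: an AI controller proposes an acceleration, and a simple, analyzable safety check passes it through only if the vehicle could still stop before the obstacle ahead using a fallback braking controller. The braking capability, safety margin, control period, and the one-step stopping-distance check are illustrative assumptions; a real supervisor would need a verified fallback and a considerably more complete safety envelope.

```python
from dataclasses import dataclass
from typing import Tuple

MAX_BRAKE = 6.0  # m/s^2, assumed deceleration the fallback controller can always achieve
MARGIN = 2.0     # m, assumed safety margin to the obstacle
DT = 0.1         # s, assumed control period


@dataclass
class State:
    speed: float  # current speed in m/s
    gap: float    # distance to the obstacle ahead in m


def is_safe(state: State, accel: float) -> bool:
    """Check whether the fallback can still stop the vehicle after `accel` is applied for one period."""
    next_speed = max(0.0, state.speed + accel * DT)
    # Trapezoidal estimate of the distance travelled; it overestimates when the vehicle
    # comes to a halt mid-period, which errs on the safe side.
    travelled = (state.speed + next_speed) / 2 * DT
    next_gap = state.gap - travelled
    stopping_distance = next_speed ** 2 / (2 * MAX_BRAKE)
    return next_gap - MARGIN >= stopping_distance


def fallback_controller(state: State) -> float:
    """Simple, verifiable fallback: brake as hard as assumed possible."""
    return -MAX_BRAKE


def supervise(state: State, ai_accel: float) -> Tuple[float, str]:
    """Pass the AI's command through if it is safe; otherwise override it with the fallback."""
    if is_safe(state, ai_accel):
        return ai_accel, "ai"
    return fallback_controller(state), "fallback"
```

Because the check only looks at the proposed command and the current state, not at the AI's internals, it stays valid even if the AI component is retrained or keeps learning during operation.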

Digital Dependability Identities (DDI)

A Digital Dependability Identity (DDI) is an instrument for documenting all specifications relevant to system dependability centrally, in a uniform and exchangeable manner, and for delivering these specifications together with a system or system part. This is especially important when system parts from different manufacturers/suppliers along the supply chain have to be integrated, and/or when different system parts are integrated dynamically at runtime (as in collaborative driving). The backbone of a DDI is an assurance case that structures and links all specifications to argue under which conditions the system will operate dependably. The evidence in this argumentation chain varies widely in nature. It may concern, for example, the safety architecture or the management of uncertainties of the AI component, such as the assurance that the data used sufficiently covers the intended context of use.
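As a rough illustration of how the conditions documented with a component could be used during integration, the following Python sketch represents a DDI as a component name, a guaranteed property, and the operating conditions under which that guarantee is argued to hold, and lets an integrator check them against the current context. This is a hypothetical simplification for illustration only; it is not the actual DDI exchange format, and all names, fields, and values are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class DependabilityIdentity:
    """Hypothetical, heavily simplified stand-in for a DDI delivered with a component."""
    component: str
    guarantee: str  # what the component's assurance case argues it provides
    # Conditions under which the guarantee is argued to hold: context variable -> (min, max)
    conditions: Dict[str, Tuple[float, float]] = field(default_factory=dict)

    def violated_conditions(self, context: Dict[str, float]) -> List[str]:
        """Return the conditions that the given operating context does not satisfy."""
        violated = []
        for name, (low, high) in self.conditions.items():
            value = context.get(name)
            if value is None or not low <= value <= high:
                violated.append(name)  # unknown context values are treated as violations
        return violated


def usable_guarantees(ddis: List[DependabilityIdentity],
                      context: Dict[str, float]) -> Dict[str, bool]:
    """Integrator-side check: which components' guarantees may be relied upon in this context."""
    return {d.component: not d.violated_conditions(context) for d in ddis}
```

In a dynamic integration scenario, such a check would run whenever the context changes, so that the system relies only on guarantees whose documented conditions currently hold.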

Dynamic Risk Management (DRM)

DRM is an approach for continuously monitoring the risk of a system and its planned behaviors as well as its safety-related capabilities. Informed by runtime models, DRM monitors the risk, optimizes performance, and ensures safety through continuous management. For AI-based systems, one input to DRM can be the output of an Uncertainty Wrapper.
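The core idea of repeatedly re-evaluating planned behaviors against an acceptable risk level, and choosing the most useful behavior that is still acceptable, can be sketched as follows. In this minimal Python example, the risk model, the threshold, the candidate behaviors, and the way uncertainty inflates the risk estimate are all hypothetical assumptions chosen for illustration; they do not represent Fraunhofer IESE's DRM method.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Behavior:
    name: str
    speed_kmh: float  # higher speed is assumed to mean more progress, i.e., higher utility


def make_risk_estimator(uncertainty: float) -> Callable[[Behavior], float]:
    """Hypothetical runtime risk model: risk grows quadratically with speed and is
    inflated by the current perception uncertainty (e.g., from an uncertainty wrapper)."""
    def estimate(behavior: Behavior) -> float:
        return (behavior.speed_kmh / 100.0) ** 2 * (1.0 + uncertainty)
    return estimate


def select_behavior(candidates: List[Behavior],
                    estimate_risk: Callable[[Behavior], float],
                    risk_threshold: float,
                    minimal_risk: Behavior) -> Behavior:
    """Pick the most useful behavior whose estimated risk is acceptable; otherwise fall back."""
    admissible = [b for b in candidates if estimate_risk(b) <= risk_threshold]
    if not admissible:
        return minimal_risk
    return max(admissible, key=lambda b: b.speed_kmh)
```

Called once per control cycle with the latest uncertainty estimate, `select_behavior` lowers the speed as uncertainty rises and switches to the minimal-risk behavior only when no candidate is acceptable, trading performance for safety instead of simply shutting the system down.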

Uncertainty Management

Uncertainty is an inherent problem in data-driven solutions and cannot be ignored. The Uncertainty Wrapper is a holistic, model-independent approach for providing dependable, situation-aware estimates of the uncertainty of AI components. These uncertainties may stem not only from the AI model itself but also from the input data and the context of use. On the one hand, the Uncertainty Wrapper can be used during the development of the AI model to manage the identified uncertainties by taking appropriate measures and making the model safer. On the other hand, at runtime, the wrapper provides a holistic view of the current uncertainty of the AI component. A safety container can in turn use this assessment to override the AI and bring the system into a lower-risk state.
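The following Python sketch illustrates the basic idea of wrapping a black-box model with a situation-dependent uncertainty estimate. It is a strong simplification and not the published Uncertainty Wrapper: situations are given by a hypothetical `situation_of` function (e.g., binned quality factors such as rain intensity), the error rate per situation is estimated on labeled calibration data, and a Hoeffding bound, which is an assumption made here, turns it into a conservative upper estimate that holds with probability of at least 1 - delta per situation.

```python
import math
from collections import defaultdict
from typing import Callable, Dict, Hashable, Iterable, Tuple


class SimpleUncertaintyWrapper:
    """Attach a situation-dependent uncertainty estimate to a black-box model (illustrative only)."""

    def __init__(self, model: Callable, situation_of: Callable[[object], Hashable],
                 delta: float = 0.05):
        self.model = model                # black box: input -> prediction (internals are not inspected)
        self.situation_of = situation_of  # input -> situation key, e.g., binned quality factors
        self.delta = delta                # each bound holds with probability >= 1 - delta
        self.bounds: Dict[Hashable, float] = {}

    def calibrate(self, samples: Iterable[Tuple[object, object]]) -> None:
        """Estimate an upper bound on the error probability per situation from labeled data."""
        errors: Dict[Hashable, int] = defaultdict(int)
        counts: Dict[Hashable, int] = defaultdict(int)
        for x, y in samples:
            key = self.situation_of(x)
            counts[key] += 1
            errors[key] += int(self.model(x) != y)
        for key, n in counts.items():
            # Hoeffding: with probability >= 1 - delta, true error rate <= observed rate + slack
            slack = math.sqrt(math.log(1.0 / self.delta) / (2 * n))
            self.bounds[key] = min(1.0, errors[key] / n + slack)

    def predict(self, x) -> Tuple[object, float]:
        """Return the model's prediction and the error-probability bound for its situation."""
        # Situations never seen during calibration get maximal uncertainty.
        return self.model(x), self.bounds.get(self.situation_of(x), 1.0)
```

At runtime, a safety container could compare the returned uncertainty with an acceptable level and override the prediction, or hand the value to a dynamic risk management component, whenever that level is exceeded.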

Training

Last but not least, we help companies build up the corresponding competencies: from Enlightening Talks for management via continuing education and training programs for becoming a Data Scientist to special training on dependable AI systems (e.g., the Safe AI seminar).

Why should my company collaborate with Fraunhofer IESE on Dependable AI?

Artificial Intelligence (AI) and Machine Learning (ML) have great potential to improve existing products and services – or even to enable completely new products and services. However, assuring and verifying essential quality properties of AI systems, and in particular properties relating to dependability, is a major challenge. Those who find and implement adequate solutions in this regard have a clear competitive edge! We are convinced: With our methods and solution components, we can actively and effectively support you on this path!

Fraunhofer IESE advises and supports companies holistically in engineering dependable AI systems:

End-to-end: We accompany companies throughout the entire lifecycle: from AI strategy via AI development to AI assurance including AI validation and AI auditing and the fulfillment of legal and normative requirements.

Multidisciplinary: We are multidisciplinary in our approach, i.e., we have competencies in software and systems engineering as well as in data science and innovation strategy. Accordingly, we offer a one-stop service where all relevant competencies regarding Dependable AI are available.

Innovative: As a Fraunhofer Institute, we are represented in innovative research projects for Dependable AI at the German and EU level, so we know the current state of the art and state of the practice regarding processes, technologies, and tools for Dependable AI.

Informed: We are involved in roadmap and standardization activities on Dependable AI through associations and standardization bodies, so we have access to current discussions and are aware of upcoming changes to legal and normative requirements.

One-stop provider: As a member of the Fraunhofer Big Data and Artificial Intelligence (AI) Alliance, we have access to extensive resources (data scientists, software packages, and computing infrastructure). This allows us to act as a one-stop provider and offer even more complex projects in the context of Dependable AI.

Our contributions to the successful implementation of Dependable AI

AI Potential Analysis

We support companies in identifying potentials and risks of AI systems in critical use cases and in creating prototypes. This helps to avoid bad investments and opportunity costs.

Multi-Aspect Safety Engineering

We develop safety arguments for safety-critical AI systems, taking into account current requirements and those under development. This ensures that the safety argumentation is future-proof based on the current state of the art and state of the practice.

Safety Supervisor Architecture

We develop AI architectures that catch risks as early as possible and lead to a dependable AI system. This enables innovative AI-based solutions to be used even in safety-critical use cases.

Digital Dependability Identities (DDI)

We specify under which conditions a critical component is dependable and integrate this into the system’s safety argumentation. This allows critical system parts from different manufacturers/suppliers to be integrated and even to be combined dynamically at runtime.

Dynamic Risk Management (DRM)

We develop mechanisms for dynamically monitoring the risk of critical AI systems at runtime and systematically reducing potential hazards. This enables efficient and effective interaction with the AI system and makes it possible to manage the risk of self-learning AI systems.

Uncertainty Management

We provide software solutions for managing uncertainties of an AI component. This allows uncertainties to be identified and made controllable at both development time and runtime.

Training

We identify knowledge gaps in the company with regard to Dependable AI and develop strategies for building up the required competencies. This allows necessary AI competencies to be built up sustainably at all levels of the company and strengthens its ability to innovate.

Publications from the area of Dependable AI

Publications of Fraunhofer IESE around the topic area “Dependable AI”

Model-based Safety Engineering

- Feth, P., Adler, R., Fukuda, T., Ishigooka, T., Otsuka, S., Schneider, D., ... & Yoshimura, K. (2018, September). "Multi-aspect safety engineering for highly automated driving". In International Conference on Computer Safety, Reliability, and Security (pp. 59-72). Springer, Cham.

- Domis, D. (2012). "Integrating fault tree analysis and component-oriented model-based design of embedded systems". Verlag Dr. Hut.

- Kaiser, B., Liggesmeyer, P., & Mäckel, O. (2003, October). "A new component concept for fault trees". In Proceedings of the 8th Australian workshop on Safety critical systems and software-Volume 33 (pp. 37-46).

- Kaiser, B., Schneider, D., Adler, R., Domis, D., Möhrle, F., Berres, A., ... & Rothfelder, M. (2018, June). "Advances in component fault trees". In Proceedings of the 28th European Safety and Reliability Conference (ESREL).

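- Reich, J., et al. "Herausforderungen und Lösungsansätze für die durchgängige Freigabeargumentation von automatisierten Fahrfunktionen: Erste Ergebnisse aus dem BMWi 'V&V Methoden'-Projekt" [Challenges and solution approaches for the end-to-end release argumentation of automated driving functions: First results from the BMWi "V&V Methods" project].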

- Kläs, M., Adler, R., Jöckel, L., Gross, J., Reich, J., "Using Complementary Risk Acceptance Criteria to Structure Assurance Cases for Safety-Critical AI Components", AI Safety 2021 at International Joint Conference on Artificial Intelligence (IJCAI), Montreal, Canada, 2021.

- Kläs, M., Adler, R., Heidrich, J., "Testen im Zeitalter von KI" [Testing in the age of AI], Informatik Aktuell, 2020.

Hardening of ML Models – AI and Data Engineering

- Adler, R., et al., “Hardening of Artificial Neural Networks for Use in Safety-Critical Applications - A Mapping Study”, https://arxiv.org/abs/1909.03036, 2019.

- Kläs, M., “Towards Identifying and Managing Sources of Uncertainty in AI and Machine Learning Models - An Overview,” https://arxiv.org/abs/1811.11669, 2018.

- Kläs, M., Vollmer, A. M., "Uncertainty in Machine Learning Applications – A Practice-Driven Classification of Uncertainty," First International Workshop on Artificial Intelligence Safety Engineering (WAISE 2018), Västerås, Sweden, 2018.

- Akram, M. N., Gerber, P., Schneider, D., "Hardening of CNN-LSTM powered by Explainable AI techniques". Submitted to the SAFECOMP WAISE Workshop 2021.

- Jöckel, L., Kläs, M., "Increasing Trust in Data-Driven Model Validation - A Framework for Probabilistic Augmentation of Images and Meta-Data Generation using Application Scope Characteristics", International Conference on Computer Safety, Reliability and Security (SAFECOMP 2019), Turku, Finland, 2019.

- Jöckel, L., Kläs, M., Martínez-Fernández, S., "Safe Traffic Sign Recognition through Data Augmentation for Autonomous Vehicles Software", 19th IEEE International Conference on Software Quality, Reliability and Security Companion (QRS-C), Sofia, Bulgaria, 2019.

Operation – Systematic Engineering of Runtime Safety Assurance

- Kläs, M., Adler, R., Sorokos, I., et al., “Handling Uncertainties of Data-Driven Models in Compliance with Safety Constraints for Autonomous Behaviour”, European Dependable Computing Conference (EDCC 2021), 2021

- Trapp, Mario; Schneider, Daniel; Weiss, Gereon (2018): Towards Safety-Awareness and Dynamic Safety Management. In: 2018 14th European Dependable Computing Conference (EDCC). Iasi, pp. 107–111.

- Schneider, Daniel; Trapp, Mario (2018): B-space: dynamic management and assurance of open systems of systems. In J Internet Serv Appl 9 (1). DOI: 10.1186/s13174-018-0084-5.

- Dynamic Risk Management Overview Videos/Webinars:

  - https://www.youtube.com/watch?v=uyxCIViOb40 (German, 2020)

  - https://www.youtube.com/watch?v=HY9NrJHLxRI (English, 2020)

  - https://www.youtube.com/watch?v=Vdn-TCGxzgA (Platooning Demonstration)

- Schneider, Daniel; Trapp, Mario (2013): Conditional Safety Certification of Open Adaptive Systems. In ACM Trans. Auton. Adapt. Syst. 8 (2), pp. 1–20. DOI: 10.1145/2491465.2491467.

- Kabir, Sohag, et al. "A runtime safety analysis concept for open adaptive systems". International Symposium on Model-Based Safety and Assessment. Springer, Cham, 2019.

- Reich, Jan, et al. "Engineering of Runtime Safety Monitors for Cyber-Physical Systems with Digital Dependability Identities". International Conference on Computer Safety, Reliability, and Security. Springer, Cham, 2020.

- Feth, Patrik (2020): Dynamic Behavior Risk Assessment for Autonomous Systems. Dissertation. Technical University Kaiserslautern, Germany.

- Reich, Jan, and Mario Trapp. "SINADRA: Towards a Framework for Assurable Situation-Aware Dynamic Risk Assessment of Autonomous Vehicles". 2020 16th European Dependable Computing Conference (EDCC). IEEE, 2020.

- Reich, Jan, et al. “Towards a Software Component to Perform Situation-Aware Dynamic Risk Assessment for Autonomous Vehicles”. Submitted to DREAMS Workshop (Dynamic Risk Management for Autonomous Systems) co-located with European Dependable Computing Conference 2021.

- Kläs, M., Sembach, L., "Uncertainty Wrappers for Data-driven Models - Increase the Transparency of AI/ML-based Models through Enrichment with Dependable Situation-aware Uncertainty Estimates," Second International Workshop on Artificial Intelligence Safety Engineering (WAISE 2019), Turku, Finland, 2019.

- Kläs, M., Jöckel, L., "A Framework for Building Uncertainty Wrappers for AI/ML-based Data-Driven Components," Third International Workshop on Artificial Intelligence Safety Engineering (WAISE 2020), Lisbon, Portugal, 2020.

- Jöckel, L., Kläs, M., "Could We Release AI/ML Models from the Responsibility of Providing Dependable Uncertainty Estimates? - A Study on Outside-Model Uncertainty Estimates," International Conference on Computer Safety, Reliability and Security (SAFECOMP 2021), 2021.

- Aslansefat, Koorosh, Ioannis Sorokos, Declan Whiting, Ramin Tavakoli Kolagari, and Yiannis Papadopoulos. "SafeML: Safety Monitoring of Machine Learning Classifiers Through Statistical Difference Measures", In International Symposium on Model-Based Safety and Assessment, pp. 197-211. Springer, Cham, 2020.