The automation of technical systems, up to and including full autonomy, is one of the current megatrends in industry. Artificial Intelligence (AI) is a key enabler of this autonomy. Such systems are often part of a larger ecosystem in which the failure of an autonomous system can cause economic damage or even harm to people. Ensuring the functional safety of systems with AI components therefore requires special measures. Existing methods prescribed by standards such as IEC 61508 are only partly applicable in this context, or do not cover the full scope of functional safety for autonomous systems. As one of the world's leading research departments in the field of Functional Safety, we work with our industry partners in national and international projects, as well as bilaterally, to solve these problems.

Safety for Autonomous Systems

Trustworthy Deep Learning

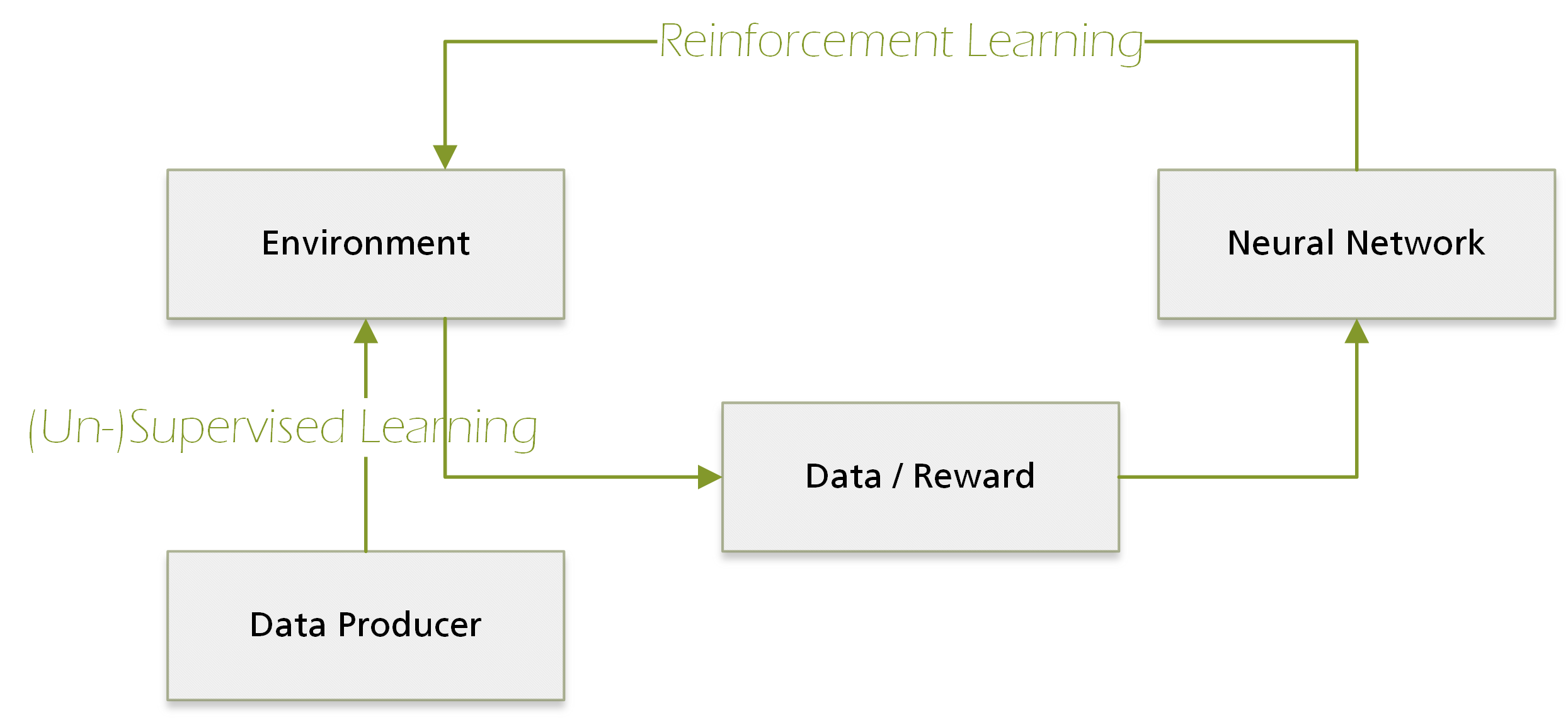

Implementing autonomous systems in open environments will require Deep Learning, that is, the use of deep artificial neural networks. These methods perform excellently on many tasks, such as classifying objects in images. However, Machine Learning methods learn from examples or by trial and error rather than from an explicit specification of the underlying algorithm. As a result, established verification methods such as code reviews can no longer be applied. In a current cooperation project funded by the state of Rhineland-Palatinate, we are working with the Fraunhofer Institute for Industrial Mathematics ITWM and the German Research Center for Artificial Intelligence (DFKI) to develop a catalog of methods, and a methodology based on it, for increasing the trustworthiness of Deep Learning applications.

We apply these methods in the context of your application and thereby increase the trustworthiness of the functionality you implement with Deep Learning.

Dynamic Risk Management

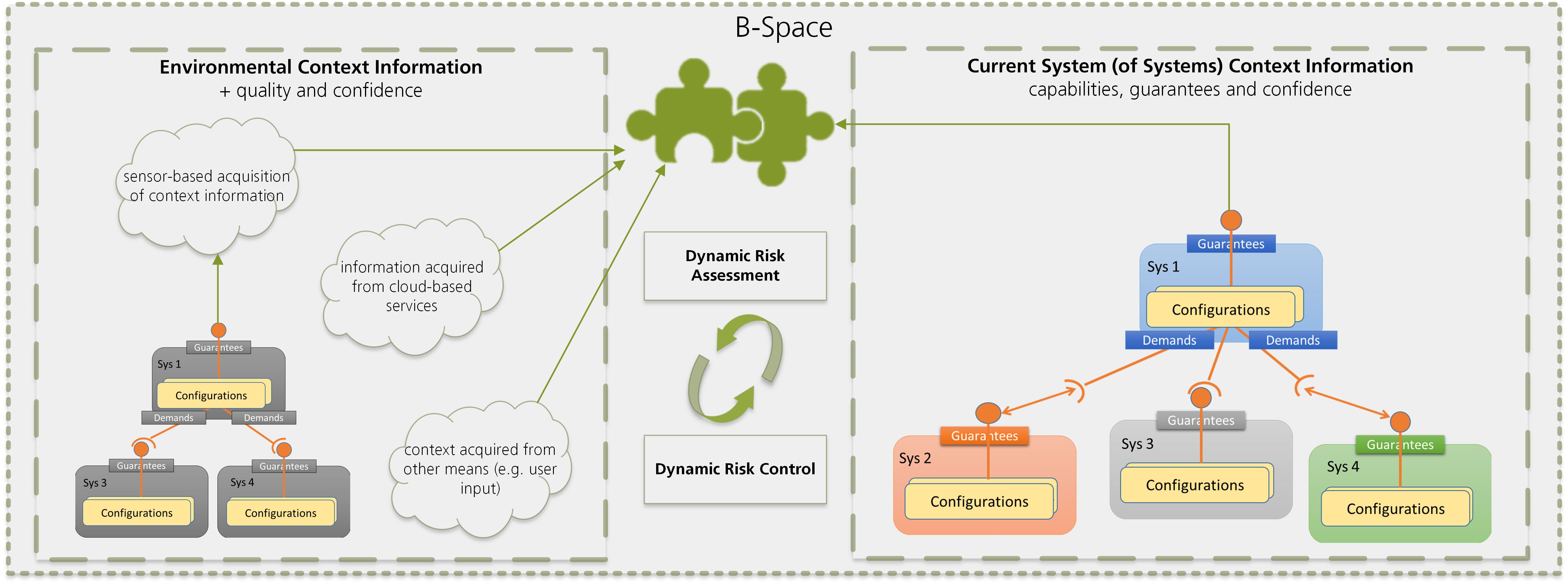

In open environments, situations can arise that were not considered during the development of a technical system. The ability to handle such situations and to behave dependably even under these circumstances is called resilience. Under the concept of dynamic risk management, we are developing methods for risk-oriented runtime reconfiguration that increase resilience. We are doing this in a European research project as well as in the Resilient Intelligence Think Lab ENARIS®. To enable the use of such methods in safety-critical systems, particular attention must also be paid to their certifiability. For this reason, our dynamic risk management concepts build on our established approaches to runtime safety certificates.

For your networked, safety-critical applications in open contexts, transferring our work into practice enables high-performance solutions with guaranteed safety properties.

Multi-Aspect Safety Engineering

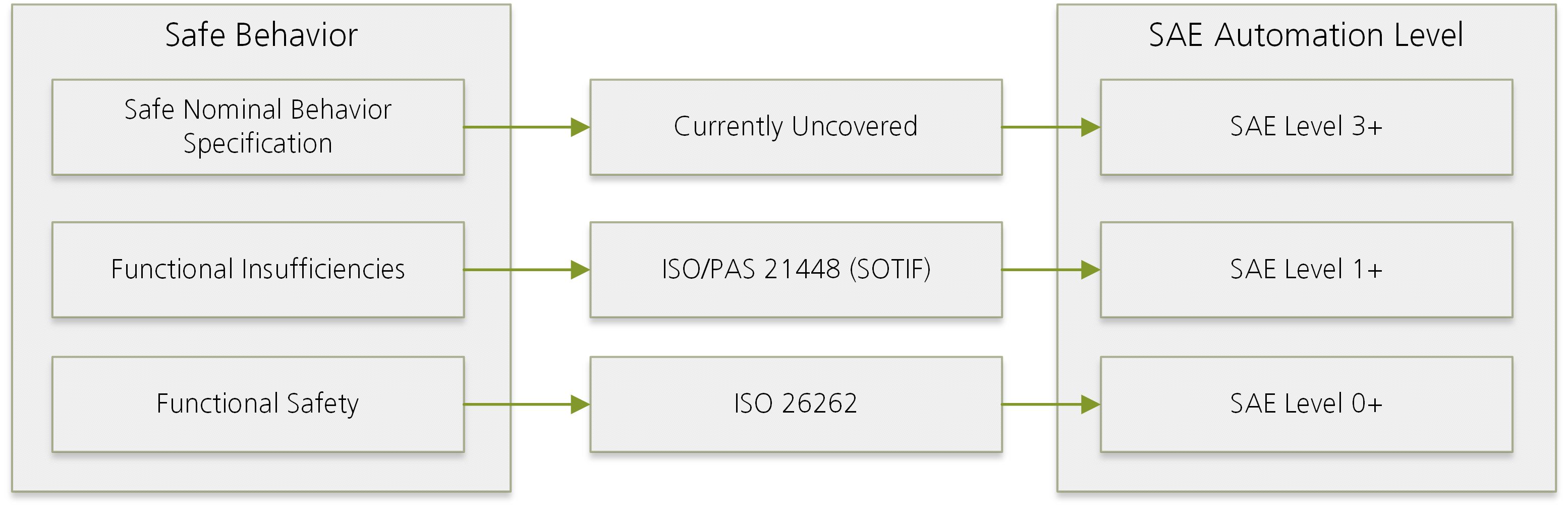

With the emergence of increasingly automated and networked systems, established methods and standards for developing and assuring safety can no longer keep pace and do not offer adequate support. New methods and standards are therefore needed to define the normative requirements for critical systems, because only by assessing a technical system against the applicable standards can one determine whether it has the required product maturity. As a result, the realization of systems with higher levels of automation is currently held back less by technical challenges than by open questions regarding the certification and release of these systems. To address these challenges, we are working with OEMs and suppliers from the automotive industry in one of the successor projects of the PEGASUS project.

We bring our expertise in developing current standards, and in going beyond them, to your projects for the market-ready implementation of highly automated systems.
