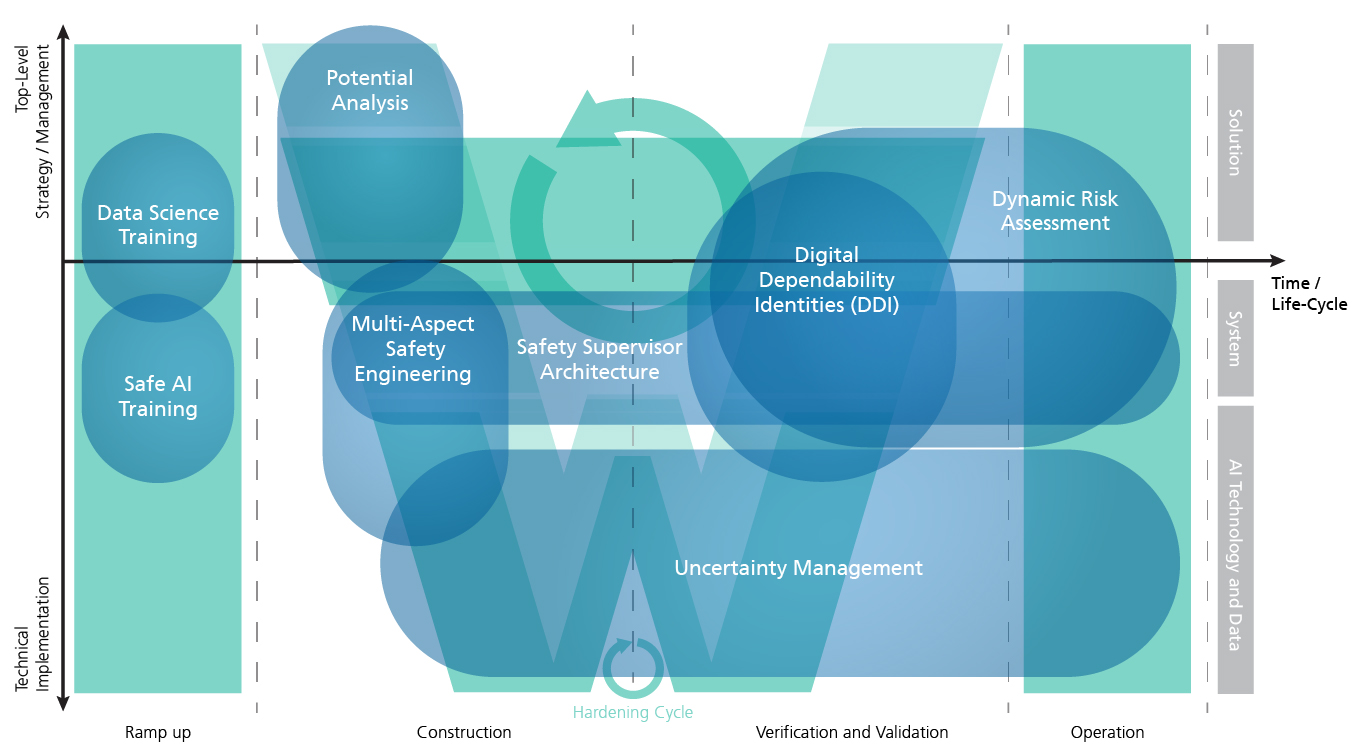

AI Potential Analysis

We determine the added value of AI for a company through potential analyses. Using established templates (such as the Value Proposition Canvas or the Business Model Canvas, BMC), we work out how AI affects the business model. We compile the norms, standards, and quality guidelines relevant to the application context and show which AI systems are already feasible today and which requirements will have to be met in the future, depending on their criticality. In the process, we also identify the gap between the company's current setup and its intended direction and develop a strategy to close it. Using prototypes, we evaluate promising application scenarios in terms of profitability, demand, and technical feasibility (e.g., with regard to the availability of data and the quality of the predictions).

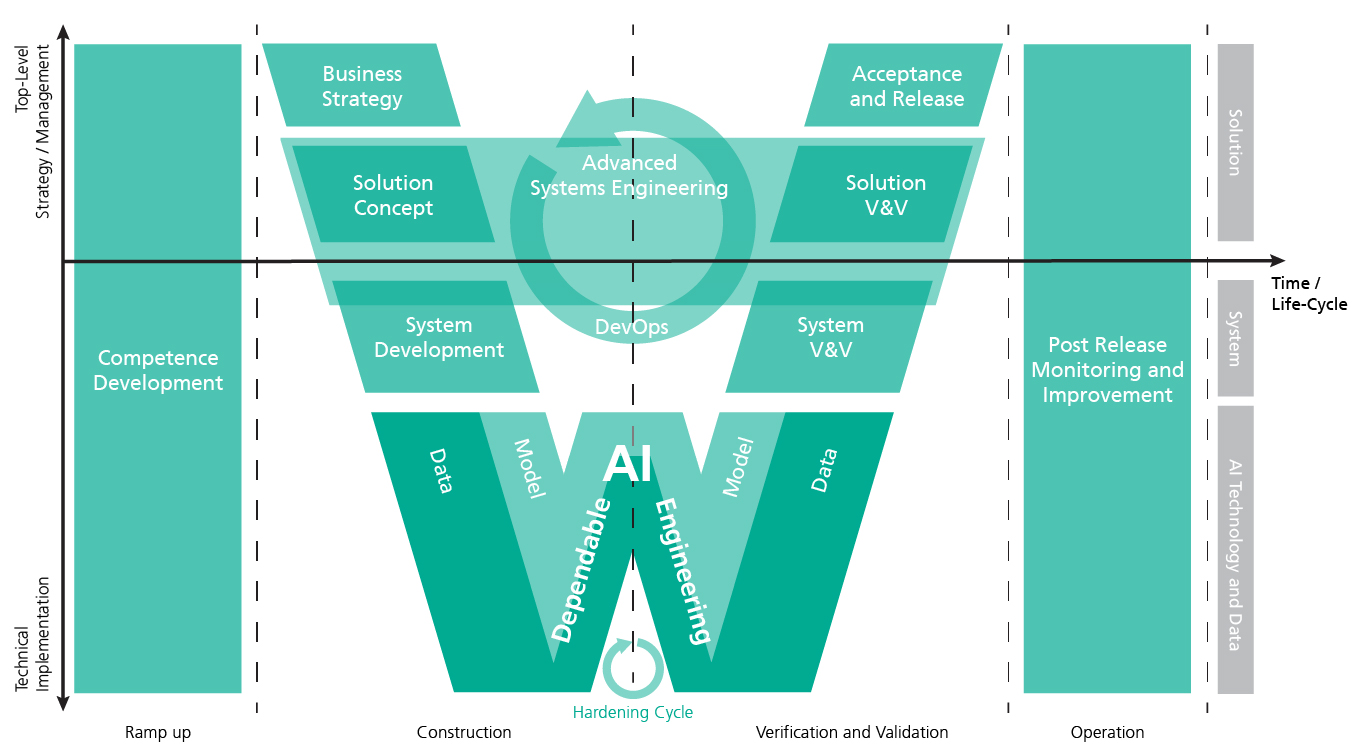

Multi-Aspect Safety Engineering

In the development of highly automated and autonomous systems in particular, functional safety (ISO 26262) is not the only concern: the safety of the intended functionality (SOTIF, ISO/PAS 21448) and the systematic development of safe nominal behavior matter just as much. Following a model-based approach, we use state-of-the-art safety engineering methods and techniques to model the system holistically, analyze it, and manage the identified risks. Assurance cases or safety cases are used to argue that the system is dependable. The evidence referenced in the assurance or safety case shows that the system behaves in such a way that the risk acceptance threshold is not exceeded.
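The structure of such an argument can be illustrated with a small sketch. It assumes a deliberately simplified assurance case: a tree of claims, where each claim is backed by sub-claims or by evidence, and each piece of evidence carries a hypothetical residual-risk estimate. The names `Claim`, `Evidence`, `is_supported`, and all numeric values are illustrative assumptions, not part of any standard or tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Evidence:
    description: str
    estimated_risk: float  # hypothetical: residual risk, e.g. per operating hour


@dataclass
class Claim:
    statement: str
    subclaims: list[Claim] = field(default_factory=list)
    evidence: list[Evidence] = field(default_factory=list)


def is_supported(claim: Claim, risk_threshold: float) -> bool:
    """A claim holds if it is backed by at least one sub-claim or piece of
    evidence, and everything beneath it stays within the risk threshold."""
    if not claim.subclaims and not claim.evidence:
        return False  # an unsupported leaf claim breaks the argument
    evidence_ok = all(e.estimated_risk <= risk_threshold for e in claim.evidence)
    subclaims_ok = all(is_supported(c, risk_threshold) for c in claim.subclaims)
    return evidence_ok and subclaims_ok


THRESHOLD = 1e-7  # hypothetical risk acceptance threshold
top = Claim("System is acceptably safe", subclaims=[
    Claim("Functional safety (ISO 26262) addressed",
          evidence=[Evidence("FMEA of the controller", 1e-8)]),
    Claim("SOTIF (ISO/PAS 21448) addressed",
          evidence=[Evidence("Scenario-based test campaign", 5e-8)]),
    Claim("Safe nominal behavior specified",
          evidence=[Evidence("Review of the behavior model", 2e-8)]),
])
print(is_supported(top, THRESHOLD))  # True
```

Real assurance cases are usually written in a dedicated notation and carry far richer argument structure; the sketch only shows the core idea that the top-level claim fails as soon as one branch lacks evidence or exceeds the threshold.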

Safety Supervisor Architecture

Another central element for the dependability of a system is its architecture. Some architectures, for example, place a kind of safety container around the AI. These Safety Supervisor or Simplex architectures override the AI's decision if it would lead to dangerous behavior of the AI system. To do this, it is important to know, for example, how uncertain the AI's recommendation is under given conditions of use, and to determine from this the risk of an incorrect decision. Such approaches are of interest for machine learning components in general and are particularly important for AI systems that continue to learn over their lifetime and can therefore change their behavior. Since it may be impossible to know at design time which data the AI will use as the basis for further learning, appropriate guardrails for its behavior must be defined and built into the system.
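The switching logic of a Simplex-style supervisor can be sketched in a few lines. This is a minimal illustration, assuming that the AI component reports an uncertainty estimate alongside its recommended action and that the guardrail is a simple acceleration envelope; `Recommendation`, `supervise`, `within_envelope`, and all limits are hypothetical.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Recommendation:
    action: float       # hypothetical: requested acceleration in m/s^2
    uncertainty: float  # estimated probability that the recommendation is wrong


def supervise(ai: Recommendation,
              safe_fallback: float,
              max_uncertainty: float,
              is_safe: Callable[[float], bool]) -> tuple[float, str]:
    """Simplex-style switch: pass the AI action through only if it is
    confident enough and passes an independent safety check."""
    if ai.uncertainty > max_uncertainty:
        return safe_fallback, "fallback: uncertainty too high"
    if not is_safe(ai.action):
        return safe_fallback, "fallback: action violates safety envelope"
    return ai.action, "ai"


def within_envelope(accel: float) -> bool:
    # Hypothetical guardrail: permitted acceleration range in m/s^2
    return -6.0 <= accel <= 2.0


print(supervise(Recommendation(1.5, 0.01), -2.0, 0.05, within_envelope))  # (1.5, 'ai')
print(supervise(Recommendation(1.5, 0.20), -2.0, 0.05, within_envelope))  # (-2.0, 'fallback: uncertainty too high')
print(supervise(Recommendation(3.0, 0.01), -2.0, 0.05, within_envelope))  # (-2.0, 'fallback: action violates safety envelope')
```

The key design choice is that the safety check and the fallback are independent of the learned component, so the guardrail keeps working even if the AI's behavior changes through further learning.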

Digital Dependability Identities (DDI)

A Digital Dependability Identity (DDI) is an instrument for centrally documenting all specifications relevant to system dependability in a uniform, exchangeable form and for delivering these specifications together with a system or system component. This is especially important when components from different manufacturers or suppliers along the supply chain have to be integrated, or when different components are integrated dynamically at runtime (as in collaborative driving). The backbone of a DDI is an assurance case that structures and links all specifications to argue under which conditions the system will operate dependably. The evidence in this chain of argumentation varies widely in nature. It may concern the safety architecture or the management of the AI component's uncertainties, for example the assurance that the data used sufficiently covers the intended context of use.
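One use of such machine-readable specifications is an integration check: before components from different suppliers are combined, each component's demands can be compared against the guarantees the others provide. The sketch below assumes a heavily simplified DDI consisting only of string-valued guarantees and demands plus a placeholder reference to the assurance case; the `DDI` class, `unmet_demands`, and all capability names are hypothetical and do not reflect an actual DDI format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DDI:
    """Hypothetical, heavily simplified DDI for one component."""
    component: str
    supplier: str
    guarantees: frozenset[str] = frozenset()
    demands: frozenset[str] = frozenset()
    assurance_case: str = ""  # placeholder reference to the assurance case


def unmet_demands(parts: list[DDI]) -> dict[str, set[str]]:
    """Return, per component, the demands no other integrated part guarantees."""
    result = {}
    for part in parts:
        offered = set().union(*(p.guarantees for p in parts if p is not part))
        missing = set(part.demands) - offered
        if missing:
            result[part.component] = missing
    return result


camera = DDI("camera", "Supplier A",
             guarantees=frozenset({"object_list@10Hz"}),
             demands=frozenset({"power_12V"}))
planner = DDI("planner", "Supplier B",
              guarantees=frozenset({"trajectory"}),
              demands=frozenset({"object_list@10Hz", "ego_pose"}))
print(unmet_demands([camera, planner]))
# {'camera': {'power_12V'}, 'planner': {'ego_pose'}}
```

An empty result would indicate that, at this level of abstraction, every demand is covered; in practice the check would also have to consider the conditions attached to each guarantee in the assurance case.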

Dynamic Risk Management (DRM)

DRM is an approach for continuously monitoring the risk of a system and its planned behaviors, as well as its safety-related capabilities. Guided by runtime models, DRM continuously manages the system in order to monitor risk, optimize performance, and ensure safety. For AI-based systems, one input to DRM can be the output of an Uncertainty Wrapper.
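A single DRM decision cycle can be sketched as follows, assuming a deliberately simple runtime risk model (current uncertainty multiplied by a per-behavior severity) and a fixed risk threshold. `Behavior`, `estimated_risk`, `select_behavior`, and all numbers are hypothetical; a real runtime model would be considerably richer.

```python
from dataclasses import dataclass


@dataclass
class Behavior:
    name: str
    performance: float      # hypothetical: benefit toward the mission goal
    hazard_severity: float  # hypothetical: severity if the behavior goes wrong


def estimated_risk(behavior: Behavior, uncertainty: float) -> float:
    # Hypothetical runtime risk model: failure probability (taken from an
    # uncertainty estimate) times the severity of the behavior.
    return uncertainty * behavior.hazard_severity


def select_behavior(candidates: list[Behavior], uncertainty: float,
                    risk_threshold: float, fallback: Behavior) -> Behavior:
    """One DRM cycle: keep the behaviors whose current risk is acceptable,
    then choose the best-performing one; otherwise use the fallback."""
    acceptable = [b for b in candidates
                  if estimated_risk(b, uncertainty) <= risk_threshold]
    if not acceptable:
        return fallback
    return max(acceptable, key=lambda b: b.performance)


fast = Behavior("drive_50kmh", performance=1.0, hazard_severity=10.0)
slow = Behavior("drive_20kmh", performance=0.4, hazard_severity=2.0)
stop = Behavior("minimal_risk_stop", performance=0.0, hazard_severity=0.0)

for u in (0.001, 0.01, 0.2):
    print(u, select_behavior([fast, slow], u, risk_threshold=0.05, fallback=stop).name)
# 0.001 drive_50kmh
# 0.01 drive_20kmh
# 0.2 minimal_risk_stop
```

Running this selection in every control cycle is what makes the management continuous: as the uncertainty changes, the system degrades gracefully instead of switching only between full operation and shutdown.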

Uncertainty Management

Uncertainty is an inherent problem in data-based solutions and cannot be ignored. The Uncertainty Wrapper is a holistic, model-independent approach for obtaining reliable, situation-specific estimates of the uncertainty of AI components. This uncertainty may stem not only from the AI model itself, but also from the input data and the context of use. On the one hand, the Uncertainty Wrapper can be used during development of the AI model to manage the identified uncertainties through appropriate measures and thereby make the model safer. On the other hand, at runtime it provides a holistic view of the AI component's current uncertainty. A safety container can in turn use this assessment to override the AI and bring the system into a lower-risk state.
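The runtime side of this idea can be illustrated with a simplified sketch: on held-out calibration data, record how often the model was wrong in each situation, store a conservative upper bound on that error rate, and look the bound up at runtime. Situations without enough calibration data count as outside the validated scope and receive maximal uncertainty. This does not reproduce the published Uncertainty Wrapper method; grouping situations by a hand-picked tuple of quality factors, the Wilson score bound, and the names `wilson_upper` and `UncertaintyWrapperSketch` are all assumptions made for illustration.

```python
import math
from collections import defaultdict

Situation = tuple[str, ...]  # hypothetical quality factors, e.g. ("rain", "night")


def wilson_upper(errors: int, n: int, z: float = 1.645) -> float:
    """One-sided upper confidence bound on an error rate (Wilson score)."""
    p = errors / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return min(1.0, centre + margin)


class UncertaintyWrapperSketch:
    """Calibrate per-situation error bounds offline, look them up at runtime."""

    def __init__(self, min_samples: int = 30):
        self.min_samples = min_samples
        self.bounds: dict[Situation, float] = {}

    def calibrate(self, records) -> None:
        """records: iterable of (situation, prediction_was_correct) pairs."""
        counts = defaultdict(lambda: [0, 0])  # situation -> [errors, total]
        for situation, correct in records:
            if not correct:
                counts[situation][0] += 1
            counts[situation][1] += 1
        self.bounds = {s: wilson_upper(e, n)
                       for s, (e, n) in counts.items() if n >= self.min_samples}

    def uncertainty(self, situation: Situation) -> float:
        # Situations not covered by enough calibration data are outside
        # the validated scope, so maximal uncertainty is reported.
        return self.bounds.get(situation, 1.0)


data = ([(("clear", "day"), True)] * 990 + [(("clear", "day"), False)] * 10
        + [(("rain", "night"), True)] * 80 + [(("rain", "night"), False)] * 20)
uw = UncertaintyWrapperSketch()
uw.calibrate(data)
print(uw.uncertainty(("clear", "day")))    # small bound, slightly above the 1% error rate
print(uw.uncertainty(("rain", "night")))   # noticeably larger bound
print(uw.uncertainty(("snow", "night")))   # 1.0 (outside calibrated scope)
```

Using an upper confidence bound rather than the raw error rate keeps the estimate conservative for rarely observed situations, which is the property a safety container needs when deciding whether to override the AI.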

Training

Finally, we help companies build the corresponding competencies in-house: from Enlightening Talks for management, through continuing education and training programs for becoming a Data Scientist, to specialized training on dependable AI systems (e.g., the Safe AI seminar).
